Beyond Good Vibes: Robust Benchmarks for AI Experiments

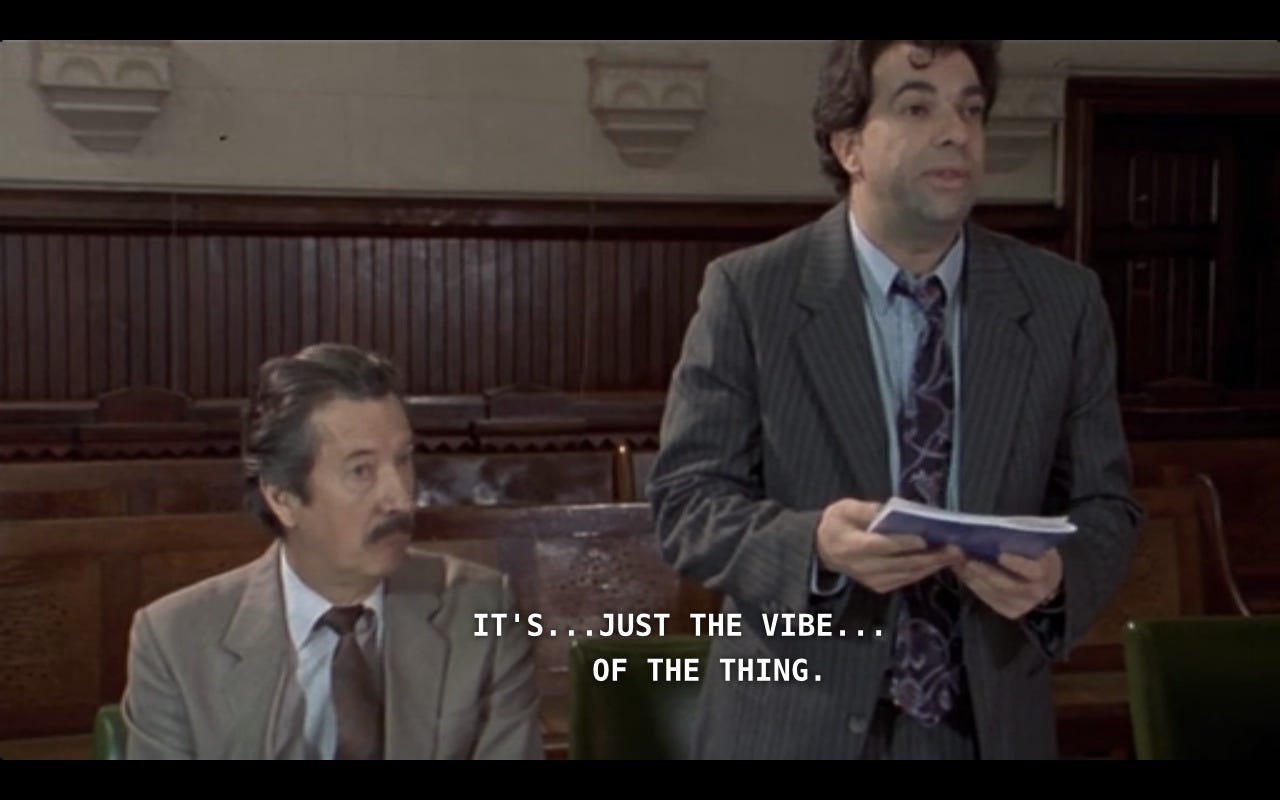

Eyeballing LLM outputs and "feeling the vibes" is better than nothing, but it's not sufficient to make your product safe.

First off, a huge thank you to everyone who joined following my inaugural post! We've grown to 74 subscribers, and I'm feeling the good vibes—fitting, since that's what we're diving into in this edition of the newsletter. So tune in, turn on, and please share your thoughts on measuring AI experiments in the comments below!

I’m about to embark on a project where I’ll help run a wide range of experiments with generative AI. It’s an exciting way to help the media industry, and I’ll be able to say more about it soon. But ever since we won the project, I’ve had something on my mind – it may not be the sexiest topic, but it’s utterly essential.

I’m talking about measurement, benchmarks and assessments. How do you measure the quality of your AI product, especially if you’re a smaller organisation without access to a data science team?

In “traditional” ML, measurement is a relatively settled topic, with a standard set of techniques and metrics to assess quality. But measuring LLMs is more nascent, and the techniques used to assess classifiers and regression models won’t always be sufficient. I’ll explain why.

Tried-and-true measurement

At Microsoft, my job was to work with a team of data scientists and engineers to build and improve content moderation classification algorithms. Unlike LLMs, these models were usually intended for a single task.

When we decided to build a new classifier, we would start by creating a clear definition of a type of “bad” content we wanted to detect. One example of this would be “clickbait”. It’s kind of a bad example because no-one can ever agree on the definition of clickbait… but I digress.

We’d create a set of detailed guidelines describing the features of the content and have a team of subject-matter experts (usually ex-journalists) label a “ground truth” dataset. This set of 100-300 examples formed the backbone of our quality assessments.

We would then train a large number of human judges on the guidelines and have them label many thousands of content examples, ideally with an even spread of positive and negative class examples (so in this case, 50% clickbait and 50% not clickbait, with all types of clickbait well represented in the data).

This data was then used to train the model, and the model was assessed against the ground-truth dataset using standard machine-learning metrics. I won’t try to explain them here, but they have names like Precision, Recall, F1 Score and False Positive Rate. They are clear, quantitative and relatively easy to understand.
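For the curious, here is a minimal Python sketch of how those four metrics fall out of a comparison between ground-truth labels and model predictions. The labels below are made up purely for illustration (1 = clickbait, 0 = not clickbait), and the function name is my own rather than anything from a particular library.

```python
def classification_metrics(y_true, y_pred):
    """Precision, recall, F1 and false positive rate for binary labels (1 = positive class)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # clickbait, correctly flagged
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # fine content, wrongly flagged
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # clickbait that slipped through
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # fine content, correctly left alone

    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    false_positive_rate = fp / (fp + tn) if fp + tn else 0.0
    return {
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "false_positive_rate": false_positive_rate,
    }


# Illustrative data only: eight ground-truth labels and the model's guesses.
ground_truth = [1, 1, 1, 1, 0, 0, 0, 0]
predictions = [1, 1, 1, 0, 1, 0, 0, 0]

metrics = classification_metrics(ground_truth, predictions)
print(metrics)
```

Precision answers "when the model flags something, how often is it right?", recall answers "how much of the bad content did it catch?", and the false positive rate tells you how much good content gets caught in the net.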

Depending on the kinds of problems you saw, you could then refine the guidelines and gather more data to iteratively improve the model. Rinse and repeat until the model reaches an acceptable level of fidelity.

What’s Different About LLMs

LLMs can also be assessed using metrics like Precision and Recall, but robust assessments are more complicated for a number of reasons.

You may have seen the standard benchmarks for overall LLM performance like MMLU (Massive Multitask Language Understanding) - there are publicly available leaderboards that track many of these. While benchmarks can be useful in selecting an LLM, unless there is a domain-specific leaderboard for your use case, these measures are likely too general to be predictive about the end-to-end success of your system.

LLMs are huge models, some with billions of parameters. Even the people who build them don’t entirely understand how they work. This makes their outputs difficult to interpret. Reliably eliminating errors from an LLM’s output is difficult, as it may be impossible to understand why the model behaves the way it does or how it reaches its conclusions.

And while a simpler classifier might be used to solve a single problem, LLMs can be applied to a very broad range of problems, from writing a press release, to coding a website, to stripping structured data out of a PDF and transferring it to an Excel spreadsheet. Each domain in which an LLM solution is applied will typically need its own set of benchmarks.

Adding to the complexity, LLMs are highly sensitive to the nuances and context of language, which leads to variability in their outputs. This sensitivity makes it difficult to standardize tests that accurately measure the model's performance across different contexts or subtle linguistic shifts.

In addition to the clear and quantitative metrics used in traditional machine learning, assessing an LLM could require metrics such as coherence, relevancy and fluency that are more subjective, potentially requiring time-consuming and expensive labelling from human experts to both create ground-truth datasets and assess results.

Then there is safety. If your use case is based on facts rather than creativity, you need to ensure that your system is not hallucinating (or at least hallucinating rarely). If you’ve used Retrieval Augmented Generation (RAG) to ground your LLM’s outputs in your own data (for example, your internal company policies for an employee chatbot), it’s recommended to measure additional metrics to ensure that the outputs are faithful to the source data and relevant to the users.
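To make the idea of a faithfulness check a little more concrete, here is a deliberately crude Python sketch. It scores an answer by the share of its sentences whose words mostly appear in the source document. This word-overlap rule, the `support_score` name and the 0.7 threshold are all assumptions made for illustration; production systems generally lean on an LLM or an entailment model for this judgement, so treat this as a way to see the shape of the check, not as a recommended metric.

```python
import re


def _words(text):
    """Lower-cased set of alphanumeric tokens in the text."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def support_score(answer, source, threshold=0.7):
    """Fraction of answer sentences whose words mostly appear in the source.

    Crude stand-in for a real faithfulness metric (an assumption, not a standard).
    """
    source_words = _words(source)
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", answer.strip()) if s]
    if not sentences:
        return 0.0
    supported = 0
    for sentence in sentences:
        words = _words(sentence)
        if words and len(words & source_words) / len(words) >= threshold:
            supported += 1
    return supported / len(sentences)


# Made-up policy text and two made-up chatbot answers.
policy = "Employees may take up to 20 days of annual leave per year."
grounded = "Employees may take up to 20 days of annual leave."
invented = (
    "Employees may take up to 20 days of annual leave. "
    "Unused days are paid out in cash every December."
)

grounded_score = support_score(grounded, policy)
invented_score = support_score(invented, policy)
print(grounded_score, invented_score)
```

The second answer scores lower because its second sentence has almost no support in the policy text, which is exactly the kind of output you would want surfaced for review.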

You also need to assume that some users will try to break or misuse the system to produce weird, inappropriate or harmful outputs. Never underestimate the mischief that the internet can visit upon your innocent little product.

Finally, even after you’ve completed your initial “offline” assessment of the model in a test environment, you’ll need ongoing “online” monitoring for issues in your system, ideally through automated metrics. These dashboards track how users are interacting with your system, the average length of prompts, the number of tokens you’re using, the latency in your system (i.e. how long it takes to return responses), and much more. Sudden variances need to be checked out and the system needs to be tracked over time to prevent it degrading.
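As a rough illustration of what "checking sudden variances" can look like, the Python sketch below flags any day whose value sits far outside the range of the preceding week. The seven-day window, the threshold of three standard deviations and the latency figures are all assumptions chosen for the example; a real dashboard would tune these and track many metrics at once.

```python
from statistics import mean, stdev


def flag_anomalies(values, window=7, z_threshold=3.0):
    """Return indices whose value is more than z_threshold standard deviations
    away from the mean of the preceding `window` values."""
    flagged = []
    for i in range(window, len(values)):
        baseline = values[i - window:i]
        mu = mean(baseline)
        sigma = stdev(baseline)
        if sigma == 0:
            # Perfectly flat baseline: a z-score is undefined, so skip this point.
            continue
        if abs(values[i] - mu) / sigma > z_threshold:
            flagged.append(i)
    return flagged


# Made-up average response latency per day, in milliseconds.
daily_latency_ms = [820, 790, 805, 810, 798, 815, 802, 808, 1450, 812]

spikes = flag_anomalies(daily_latency_ms)
print(spikes)
```

The same function could be pointed at average prompt length or daily token usage; the point is simply to have something automated raise a hand when a metric jumps.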

For a fantastic in-depth read on different metrics and techniques for evaluating LLM systems, check out this article from Microsoft ML scientist Jane Huang.

What about those vibes?

If you’re thinking that sounds like a lot of work, take heart. Depending on the level of risk associated with your application, you may only need to do some of these things. For example, if you’re just using an LLM to brainstorm ideas and edit your own copy, this kind of robust testing would be completely over-the-top.

The most basic forms of assessment are ad-hoc tests jokingly referred to as “vibe checks” in the industry. These involve a human eyeballing the outputs of a model to ensure that it meets expectations. It’s actually a crucial and legitimate step in the development process that can help identify obvious problems. It’s also very fast and allows for rapid iteration to fix issues. But it has some big drawbacks – the testing will typically be narrow, using a small sample size, and is likely to be affected by the preferences and biases of the vibe checkers.

This is unfortunately where a lot of testing stops, particularly for smaller organisations that can’t afford to hire a team of data scientists. For any scaled user-facing application, this is a big risk - I can’t say for certain, but I imagine that certain recent AI controversies would have been prevented if testing had gone further than just vibes.

A step up from the vibe check is more thorough and structured human-in-the-loop testing. For example, you might create a set of prompts that you expect will mirror real user interactions and gather responses from your system that are reviewed by human judges. But the cost and time involved in the human assessments can be considerable.
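Here is a small Python sketch of how the results of that kind of structured review might be tallied. It assumes three judges each give a "pass" or "fail" label to every response; the prompt IDs, labels and function name are invented for the example. Using an odd number of judges is a simple way to avoid tied votes.

```python
from collections import Counter


def summarise_reviews(reviews):
    """Majority verdict and level of agreement for each reviewed response.

    `reviews` maps a prompt ID to the list of labels given by each judge.
    """
    summary = {}
    for prompt_id, labels in reviews.items():
        verdict, votes = Counter(labels).most_common(1)[0]
        summary[prompt_id] = {"verdict": verdict, "agreement": votes / len(labels)}
    return summary


# Made-up labels from three human judges for three test prompts.
reviews = {
    "p1": ["pass", "pass", "pass"],
    "p2": ["pass", "fail", "fail"],
    "p3": ["fail", "fail", "fail"],
}

summary = summarise_reviews(reviews)
pass_rate = sum(1 for r in summary.values() if r["verdict"] == "pass") / len(summary)
needs_discussion = [pid for pid, r in summary.items() if r["agreement"] < 1.0]
print(pass_rate, needs_discussion)
```

Responses where the judges disagree are often the most useful ones to look at, because they tend to expose gaps in the guidelines rather than in the model.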

Another popular technique is to use a huge frontier LLM to assess the outputs of smaller language models. For example, you might use Google’s Gemini 1.5 Pro to assess the outputs of a model that uses Gemini 1.5 Flash, or Claude 3 Opus to assess GPT 3.5. Smaller models are cheaper to run, so this set-up has the advantage of using the most performant models to ensure quality, while keeping overall costs down.

The reaction of many people when they hear this is terror – machines assessing machines! There are risks associated with this method, and even the most advanced models hallucinate and make basic errors. But I’ve personally worked on at-scale systems where GPT 4 outperformed human judges by a considerable margin. Humans tend to make a wide variety of idiosyncratic mistakes in their work, whereas models (even LLMs) tend to fail in more predictable ways, providing a path to improving performance through prompt engineering.
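For readers who want to see the mechanics, this is a minimal Python sketch of the model-as-judge pattern. Everything in it is an assumption for illustration: the wording of the grading prompt, the 1 to 5 scale, and especially `call_judge_model`, which stands in for whatever function sends a prompt to your chosen frontier model and returns its text reply. A fake judge is used here so the example runs without any API access.

```python
import re

# Hypothetical grading prompt; the wording and the 1-5 scale are assumptions.
JUDGE_PROMPT = """You are grading an AI assistant's answer.
Question: {question}
Answer: {answer}
Rate the answer from 1 (unusable) to 5 (excellent) for accuracy and relevance.
Reply in the form: SCORE: <number>"""


def judge_answer(question, answer, call_judge_model):
    """Ask a judge model to score an answer; return an int from 1 to 5, or None.

    `call_judge_model` is a placeholder: any function that takes a prompt
    string and returns the judge model's reply as a string.
    """
    reply = call_judge_model(JUDGE_PROMPT.format(question=question, answer=answer))
    match = re.search(r"SCORE:\s*([1-5])\b", reply)
    # Judges fail too: if the reply is not in the expected form, report no score.
    return int(match.group(1)) if match else None


def fake_judge(prompt):
    """Stand-in for a real model call so the sketch runs offline."""
    return "SCORE: 4"


score = judge_answer("What is our leave policy?", "Up to 20 days per year.", fake_judge)
unparsed = judge_answer("What is our leave policy?", "Up to 20 days per year.", lambda p: "Looks fine to me")
print(score, unparsed)
```

Because the judge's behaviour is driven by the grading prompt, systematic mistakes can often be addressed by editing that prompt and re-running the same test set, which is the prompt-engineering path mentioned above.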

So much more to say

This is not a topic that can be covered off in a single newsletter. In fact, it’s already the subject of textbooks. But evals for LLMs are an evolving field that will change quickly, hopefully leading to more robust and standardised testing methods in the near future.

If you made it to the end – I congratulate you! Next week I’m planning to bring you a chat with a special guest from one of Australia’s most prominent academic AI institutes.

And if you’ve been developing your own LLM-based products, I’d love to hear how you make sure that you’re releasing accurate, safe and performant systems into the wild. Make a comment!

This is such a vital and difficult area - the reasons for the difficulty go to the heart of the tech; they’re not peripheral. I am starting to believe this first generation of LLMs is really quite limited and we are waiting on new breakthroughs. Still amazing, but so are word processors.

Hey Shaun, this is terrific - I've liked them all so far but like this one the best. Great explanation of the processes and metrics we used at MSFT for content moderation classifiers (I still dream of defining clickbait). And I've wondered about using classifiers to analyse LLM responses (i.e. at scale, e.g. >20,000 prompt responses). Using machines to work with machines is almost certainly the path we will be staying on, but keeping a human in the loop, and eyeballing, remain crucial.